I’ve been a GNU/Linux admin for over ten years now, and one thing most admins loathe is the infamous “Patch Tuesday”. Mostly that’s because new software can break things that were working, but most also don’t like having to log into every server and run updates. Some tend to forget that the primary purpose of applying patches or updates is to address security vulnerabilities, which is why most patches are associated with errata of different categories, e.g. security errata. If you don’t patch it, it’s entirely possible for someone to exploit the vulnerability.

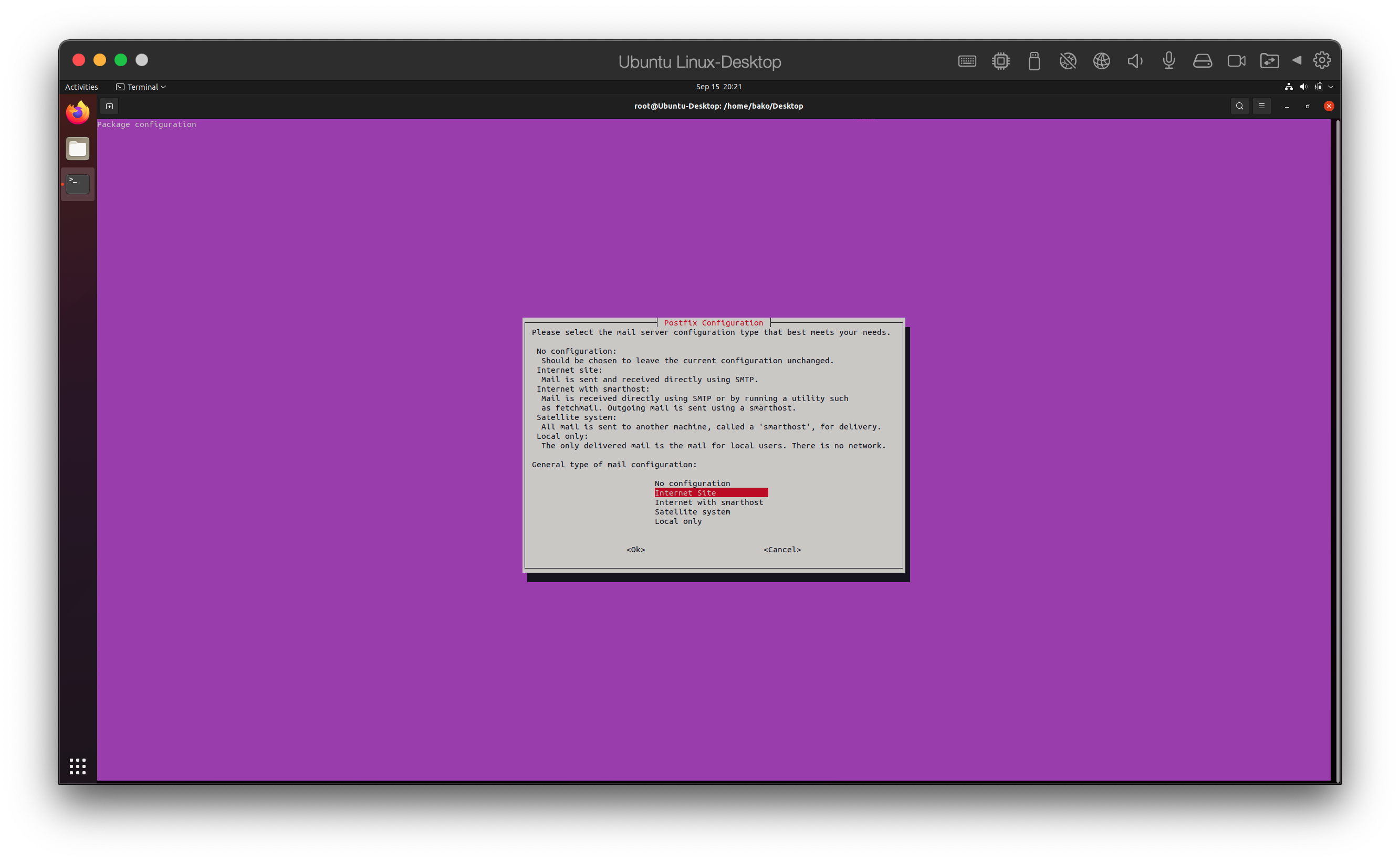

I’m going to assume you already know how to use APT and YUM to install, update, upgrade, and remove software. So let’s talk about automating the updates so that you don’t have to log into every server to get it patched. For most workstations, you have cron-apt for Debian-based systems and yum-cron for Red Hat-based systems. Let’s get started with setting up cron-apt. First we’ll run apt install cron-apt and it’ll show this.

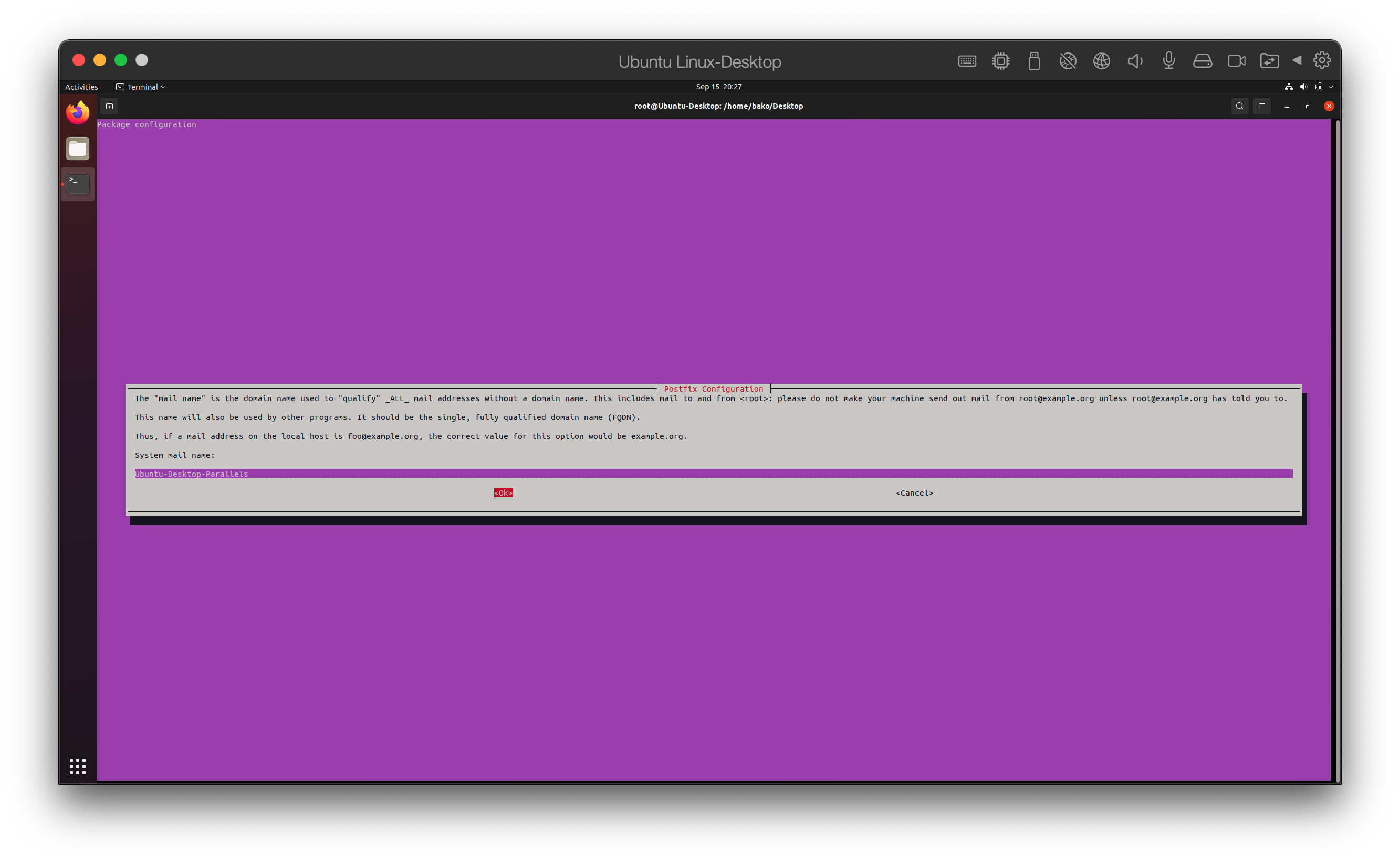

Let’s set up some email notification. Since this is just a VM and not some domain-oriented enterprise work system, I’ll use “Ubuntu-Desktop-Parallels” where you would normally put your system FQDN or the domain of the mail system.

Now let’s get dirty. The config for cron-apt is in /etc/cron-apt. Let’s see what we have in there…

root@Ubuntu-Desktop:/etc/cron-apt# find /etc/cron-apt/ -type f

/etc/cron-apt/config

/etc/cron-apt/action.d/0-update

/etc/cron-apt/action.d/3-download

root@Ubuntu-Desktop:/etc/cron-apt# find /etc/cron-apt/ -type d

/etc/cron-apt/

/etc/cron-apt/mailonmsgs

/etc/cron-apt/errormsg.d

/etc/cron-apt/syslogmsg.d

/etc/cron-apt/config.d

/etc/cron-apt/logmsg.d

/etc/cron-apt/action.d

/etc/cron-apt/syslogonmsgs

/etc/cron-apt/mailmsg.d

root@Ubuntu-Desktop:/etc/cron-apt#
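The /etc/cron-apt/config file in that listing is where the mail behaviour is controlled, as a set of shell-style variable assignments. As a minimal sketch, assuming the stock MAILTO and MAILON options, the settings could be appended as below. The helper name and the recipient address are made up for illustration.

```shell
# Hypothetical helper: append mail settings to a cron-apt config file.
# $1 = config file path, $2 = recipient address (both supplied by the caller).
set_cron_apt_mail() {
    conf="$1"
    rcpt="$2"
    {
        printf 'MAILTO="%s"\n' "$rcpt"
        # "upgrade" mails when packages are upgraded; "error" mails only on failures.
        printf 'MAILON="%s"\n' "upgrade"
    } >> "$conf"
}

# On a real host (as root), with an address of your choosing:
#   set_cron_apt_mail /etc/cron-apt/config root@Ubuntu-Desktop-Parallels
```

Taking the file path as an argument means the helper can be tried on a scratch copy before touching the real config.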

Some googling and the man pages (man cron-apt) will get you into the detail of what they all do, so let’s take care of the default files. The action.d directory holds snippet files of apt-get arguments, one command per line, that get run in sorted order of their filenames.

root@Ubuntu-Desktop:/etc/cron-apt/action.d# cat 0-update

update -o quiet=2

root@Ubuntu-Desktop:/etc/cron-apt/action.d# cat 3-download

autoclean -y
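To preview what a set of action files amounts to, here is a small sketch that walks a directory in the shell’s sorted glob order and prints each line as the apt-get command it corresponds to. The function name is hypothetical, and it assumes cron-apt’s ordering matches the shell’s for simple names like the ones above.

```shell
# Print each action file's lines as the apt-get command they correspond to,
# visiting files in the shell's sorted glob order.
# $1 = action directory (defaults to /etc/cron-apt/action.d).
preview_actions() {
    dir="${1:-/etc/cron-apt/action.d}"
    for f in "$dir"/*; do
        [ -f "$f" ] || continue
        # The extra test keeps a final line that lacks a trailing newline.
        while IFS= read -r line || [ -n "$line" ]; do
            [ -n "$line" ] || continue
            printf '%s: apt-get %s\n' "${f##*/}" "$line"
        done < "$f"
    done
}

# Usage on a host with cron-apt installed:
#   preview_actions /etc/cron-apt/action.d
```

For the two files shown above it would print “0-update: apt-get update -o quiet=2” followed by “3-download: apt-get autoclean -y”. Nothing is executed; it only reads the files.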

So the 0-update file runs before the 3-download file, meaning a quiet update of the package lists is run first and then autoclean clears obsolete package files out of the local cache. That’s the basic simple stuff, without making this post entirely about cron-apt. But you may be wondering how one controls the frequency of the updates. Well, good ol’ fashioned cron.

root@Ubuntu-Desktop:/etc/cron-apt# apt-file list cron-apt

cron-apt: /etc/cron-apt/action.d/0-update

cron-apt: /etc/cron-apt/action.d/3-download

cron-apt: /etc/cron-apt/config

cron-apt: /etc/cron.d/cron-apt

cron-apt: /etc/logrotate.d/cron-apt

cron-apt: /usr/sbin/cron-apt

cron-apt: /usr/share/cron-apt/functions

cron-apt: /usr/share/doc/cron-apt/README.gz

cron-apt: /usr/share/doc/cron-apt/changelog.gz

cron-apt: /usr/share/doc/cron-apt/copyright

cron-apt: /usr/share/doc/cron-apt/examples/0-update

cron-apt: /usr/share/doc/cron-apt/examples/3-download

cron-apt: /usr/share/doc/cron-apt/examples/9-notify

cron-apt: /usr/share/doc/cron-apt/examples/config

cron-apt: /usr/share/man/fr/man8/cron-apt.8.gz

cron-apt: /usr/share/man/man8/cron-apt.8.gz

root@Ubuntu-Desktop:/etc/cron-apt# cat /etc/cron.d/cron-apt

#

# Regular cron jobs for the cron-apt package

#

# Every night at 4 o'clock.

0 4 * * * root test -x /usr/sbin/cron-apt && /usr/sbin/cron-apt

# Every hour.

# 0 * * * * root test -x /usr/sbin/cron-apt && /usr/sbin/cron-apt /etc/cron-apt/config2

# Every five minutes.

# */5 * * * * root test -x /usr/sbin/cron-apt && /usr/sbin/cron-apt /etc/cron-apt/config2

root@Ubuntu-Desktop:/etc/cron-apt#

As you can see, the package drops a file in /etc/cron.d that runs cron-apt at 0400 every night.

Now let’s move on to yum-cron… or shall we say dnf-automatic. In case you’re wondering, DNF is in short based on YUM but much better and faster. On older systems you might see yum-cron; on newer RHEL 8 based systems it’s essentially replaced by dnf-automatic. So if you want to keep up with the times, just mentally replace yum with dnf; most of the subcommands and options are the same. After a simple dnf install dnf-automatic you can see which files get added.

[root@rocky-linux yum.repos.d]# dnf repoquery -l dnf-automatic

Last metadata expiration check: 0:12:10 ago on Wed 15 Sep 2021 09:49:51 PM HST.

/etc/dnf/automatic.conf

/usr/bin/dnf-automatic

/usr/lib/python3.6/site-packages/dnf/automatic

/usr/lib/python3.6/site-packages/dnf/automatic/__init__.py

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/__init__.cpython-36.opt-1.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/__init__.cpython-36.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/emitter.cpython-36.opt-1.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/emitter.cpython-36.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/main.cpython-36.opt-1.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/__pycache__/main.cpython-36.pyc

/usr/lib/python3.6/site-packages/dnf/automatic/emitter.py

/usr/lib/python3.6/site-packages/dnf/automatic/main.py

/usr/lib/systemd/system/dnf-automatic-download.service

/usr/lib/systemd/system/dnf-automatic-download.timer

/usr/lib/systemd/system/dnf-automatic-install.service

/usr/lib/systemd/system/dnf-automatic-install.timer

/usr/lib/systemd/system/dnf-automatic-notifyonly.service

/usr/lib/systemd/system/dnf-automatic-notifyonly.timer

/usr/lib/systemd/system/dnf-automatic.service

/usr/lib/systemd/system/dnf-automatic.timer

/usr/share/man/man8/dnf-automatic.8.gz

[root@rocky-linux yum.repos.d]#

You’ll notice that DNF, a.k.a. the YUM replacement, has a new config file called “automatic.conf”. Take a peek at that file and pay attention to the “upgrade_type” and “apply_updates” settings. By setting “upgrade_type” to “security” and “apply_updates” to “yes”, each run will install the available security updates and nothing else.

Now to set up the schedule, i.e. how often it runs. You’ll notice that the package files are systemd-style: there’s a dnf-automatic.service file and a dnf-automatic.timer file. For those who don’t know, service files are essentially the replacement for SysV init scripts, and they’re much easier to write, if I might add. Timer files are kind of like cron entries that systemd handles separately from cron. In the timer file you’ll see an “OnCalendar” line with a possibly unfamiliar datetime format. That format is defined in systemd.time and is a more “human” form of date specification; look up “Calendar Events” in the systemd.time documentation and it’ll explain the format. After that you just need to enable and start the timer, which triggers the service on schedule.
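Put together, those steps might look something like the sketch below. The two helper names, the drop-in directory, and the 04:00 schedule are illustrative assumptions rather than anything the package ships, and the commands that touch the live system are left as comments.

```shell
# Hypothetical helper: switch an automatic.conf to security-only, auto-applied updates.
# $1 = path to the config file. A .bak copy of the original is kept alongside it.
set_automatic_conf() {
    sed -i.bak \
        -e 's/^upgrade_type[[:space:]]*=.*/upgrade_type = security/' \
        -e 's/^apply_updates[[:space:]]*=.*/apply_updates = yes/' \
        "$1"
}

# Hypothetical helper: write a systemd drop-in that reschedules a timer.
# $1 = drop-in directory, $2 = OnCalendar expression.
write_timer_override() {
    mkdir -p "$1"
    cat > "$1/override.conf" <<EOF
[Timer]
# The empty assignment clears the packaged schedule before the new one is set.
OnCalendar=
OnCalendar=$2
EOF
}

# On a real host (as root):
#   set_automatic_conf /etc/dnf/automatic.conf
#   systemd-analyze calendar '*-*-* 04:00'    # sanity-check the expression
#   write_timer_override /etc/systemd/system/dnf-automatic.timer.d '*-*-* 04:00'
#   systemctl daemon-reload
#   systemctl enable --now dnf-automatic.timer
#   systemctl list-timers dnf-automatic.timer
```

The drop-in is optional. If the packaged schedule suits you, editing the config and enabling the timer is all that’s needed.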

Now your system is updating automatically. But if you’ve ever worked in an environment that actually uses Lifecycle Environments, you might be asking how you control what software gets deployed to systems that are now updating themselves. I bring you Red Hat Satellite, which is essentially a rebranded version of The Foreman and has “Content Management”. Or you can just set up your own repositories using reposync. After playing around with Red Hat Satellite, I’d say it might be best and easiest to just stand up your own repositories unless you use all of its functions. (Stay away from the ISS import/export of Content Views for Disconnected Satellites.)

Using a Content Management system gives you more control over what is and is not available to be installed on the systems. For example, some STIGs that I frequently have to deal with require that certain software not be installed, and if it is installed, it needs to be appropriately justified, approved, and documented. An example is the simple, easy, and highly insecure TFTP server package. Satellite also assesses the software installed on the systems to see which patches, of which types, are available for which systems. It can run “jobs” that reach into all the systems and run updates. It can also use Ansible, though that does require investing in an Ansible Tower setup for facts, plays, playbooks, and all that jazz to run updates.
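For the do-it-yourself route with reposync, a rough sketch follows. The repo id, the web root, the mirror hostname, and the helper name are all placeholders, and the sketch assumes the mirrored directory is served over HTTP and that clients already trust the signing key.

```shell
# Hypothetical helper: write a .repo definition that points clients at an internal mirror.
# $1 = output file, $2 = repo id, $3 = base URL of the mirrored repo.
write_repo_file() {
    cat > "$1" <<EOF
[$2]
name=Internal mirror of $2
baseurl=$3
enabled=1
gpgcheck=1
EOF
}

# On the mirror host (reposync comes from dnf-plugins-core):
#   dnf reposync --repoid=baseos --download-metadata -p /var/www/html/repos
# On each client, once the directory is being served:
#   write_repo_file /etc/yum.repos.d/internal-baseos.repo internal-baseos \
#       http://mirror.example.internal/repos/baseos
```

Because clients only see what has been synced to the mirror, deciding when to run reposync becomes the control point for what the automatic updates can pull in.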

The point is that one should get one’s systems patched, tested, and deployed to production as soon as possible, not just on the common once-a-month update schedule.
